Sticky sessions are evil. OK, I’ve said it. Actually, I have said it before. In fact, I am surprised at how often I have had to say it. They seem to get used with surprising frequency without anyone properly thinking through the repercussions. I have been asked to explain the issues several times, so let me walk through and document them.

Background

Before we delve into the issues with sticky sessions, let’s start with what they are and why they get used. The HTTP protocol is inherently stateless: each request to a web server is executed independently, without any knowledge of previous requests. However, it is fairly common for a web application to need to track a user’s progress between pages. Examples include keeping track of login information, progress through a test with correct/incorrect totals, or the items in a shopping cart. We could fill this whole page with examples.

Just about every web application framework that I am familiar with (PHP, Java, .NET, etc.) has a simple way to handle this: the developer just enables server sessions. A session is created for each user, and it stores all the transient user data for the web application. The data is typically stored in server memory and looked up on each web request via a session key.
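To make the mechanism concrete, here is a minimal Python sketch of a per-server, in-memory session store. The class `InMemorySessionStore` and its methods are hypothetical illustrations, not any framework's API; real frameworks layer expiry and cookie handling on top of the same basic idea.

```python
import secrets


class InMemorySessionStore:
    """Hypothetical per-server session store: data lives only in this process's memory."""

    def __init__(self):
        self._sessions = {}  # session key -> dict of transient user data

    def create(self):
        # Random session key; a framework would normally send it to the browser in a cookie.
        key = secrets.token_hex(16)
        self._sessions[key] = {}
        return key

    def get(self, key):
        # Returns None when this server has never seen the key.
        return self._sessions.get(key)


store = InMemorySessionStore()
key = store.create()
store.get(key)["cart"] = ["book"]
print(store.get(key))  # {'cart': ['book']}
```

Because the dictionary lives inside one process, a second server has no way to see it, which is the root of everything that follows.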

This works great until you have to deploy the application across multiple load-balanced servers. Any enterprise-level application will have at least two servers, even if a single server could handle the expected traffic, primarily for redundancy and maintenance. The Cisco CSS and the F5 BIG-IP are popular load balancers. But the server session exists on only a single server, so we have to ensure that the load balancer always sends all requests from a single user to the server that holds their session. This is where sticky sessions come in.


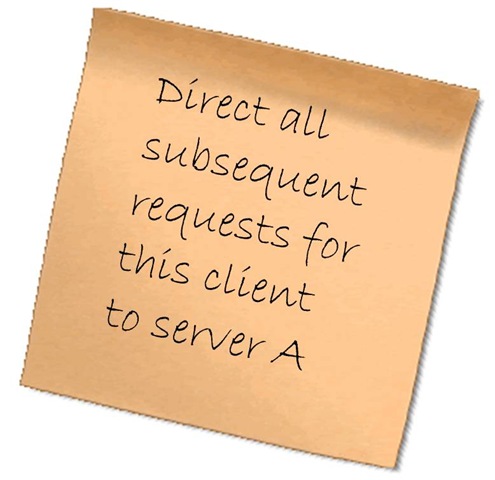

When sticky sessions are enabled, the load balancer keeps track of which server it has routed each user's past requests to and sends that user's future requests to the same server. On most load balancers this is a simple option that can be turned on. It is sometimes also referred to as server affinity or persistence-based load balancing. That is obviously an oversimplification, but I think you get the idea.
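Here is a rough sketch of IP-based sticky routing, assuming a simple round-robin choice for clients the balancer has not seen before. `StickyLoadBalancer` is a hypothetical class; real products such as the Cisco CSS or F5 BIG-IP implement persistence very differently and with many more options, but the affinity table captures the idea.

```python
import itertools


class StickyLoadBalancer:
    """Hypothetical IP-based sticky routing: round-robin for new clients,
    then an affinity table pins each client IP to the server it first reached."""

    def __init__(self, servers):
        self._next = itertools.cycle(servers)
        self.affinity = {}  # client IP -> server name

    def route(self, client_ip):
        if client_ip not in self.affinity:
            self.affinity[client_ip] = next(self._next)
        return self.affinity[client_ip]


lb = StickyLoadBalancer(["app1", "app2"])
print(lb.route("203.0.113.10"))  # app1
print(lb.route("198.51.100.7"))  # app2
print(lb.route("203.0.113.10"))  # app1 again: same client IP, same server
```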

Issues

So far this all seems simple and straightforward. We are using built-in features of the web application framework and standard options on the load balancer. So what is the issue? The issue arises when the load balancer is forced to shift a user to a different server. When that happens, all of the user’s session data is lost. The effects vary by application and by what the user is doing at the time. Perhaps they get booted back to a login prompt. Or maybe they lose the contents of their shopping cart. Or maybe they suddenly lose their place in the test and are forced back to the beginning. All of these cases make the application appear defective to the end user.
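The following hypothetical sketch, in plain Python with made-up names (`AppServer`, `handle`), shows why the shift hurts: each server keeps sessions in its own memory, so the server a user is moved to simply does not know them.

```python
class AppServer:
    """Hypothetical app server: sessions live only in this object's memory."""

    def __init__(self):
        self.sessions = {}  # session key -> session data

    def handle(self, session_key):
        session = self.sessions.get(session_key)
        if session is None:
            return "redirect to login"  # this server has never seen the session
        return f"welcome back, {session['user']}"


servers = {"app1": AppServer(), "app2": AppServer()}
servers["app1"].sessions["abc123"] = {"user": "alice"}  # alice logged in through app1

print(servers["app1"].handle("abc123"))  # welcome back, alice
# app1 is pulled from the pool (overload, maintenance, ...), so the balancer
# sends alice's next request to app2, which holds no copy of her session.
print(servers["app2"].handle("abc123"))  # redirect to login
```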

So why might the load balancer be forced to shift the user to a different server even when sticky sessions are enabled? There are actually a number of reasons. I’ll list a few of them.

- Load Balancing: The job of the load balancer is to keep the load fairly evenly distributed across all the servers in the pool. Sticky sessions interfere with that. Depending on user activity, the load can become unbalanced, and eventually an individual server may become overburdened and unresponsive. When the load balancer detects this, it will begin rerouting some of the traffic destined for that server to another one. This keeps the application up, but the user experiences a glitch.

- Maintenance: From time to time a server has to come down to perform maintenance. This might be patches to the operating system or application server. But it might also be a standard part of deploying application updates. Either way, you must plan to take servers down from time to time. When that happens, the load balancer will shift traffic to remaining servers automatically. But again, the user experiences a glitch.

- Session mis-identification: There are a few scenarios where a load balancer might group multiple users into the same sticky session. The typical way a load balancer associates a session with a user is through the source IP address of the requests. There are alternative ways to set it up, but this is normally the default and the least obtrusive. But is it reliable? If requests from multiple users pass through a proxy server or network address translation (NAT), they may all have the same IP address. Think about all the AOL users passing through a small bank of proxy servers. Or all the users at a company whose computers reach the Internet via NAT. We use a content delivery network, so user requests arrive at our origin via a CDN edge node, carrying the edge node's IP. In any of these scenarios, a whole group of users is routed to the same server, because the load balancer identifies them all as part of the same sticky session.

- Session identifier changes: As in the example above, the user’s IP address may be used to identify the user. However, there are circumstances where the user’s IP address might change between requests. Perhaps their request passes through a different proxy server or a different CDN edge node, DHCP renews their lease with a different address, or the user’s device is assigned a new IP as it moves from one network to another. These cases are rare, but when one happens the user just sees a glitch in your application.

- Cloud support: Many cloud providers do not support sticky sessions. Amazon Web Services only recently announced it was adding this feature to its Elastic Load Balancers. If you are considering moving your application to the cloud, you might not be able to rely on sticky sessions.
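The two identification problems above can be simulated with an IP-keyed affinity table like the one a sticky load balancer keeps. This is a hypothetical sketch using documentation-range IP addresses, and it assumes round-robin assignment for new IPs, which is a simplification of what a real balancer does.

```python
import itertools
from collections import Counter


class StickyLoadBalancer:
    """Hypothetical IP-keyed sticky routing with round-robin for unseen IPs."""

    def __init__(self, servers):
        self._next = itertools.cycle(servers)
        self.affinity = {}  # client IP -> server name

    def route(self, client_ip):
        if client_ip not in self.affinity:
            self.affinity[client_ip] = next(self._next)
        return self.affinity[client_ip]


lb = StickyLoadBalancer(["app1", "app2"])

# Mis-identification: 100 users behind one NAT gateway or CDN edge node
# share a single source IP, so every one of them lands on the same server.
nat_ip = "192.0.2.1"
load = Counter(lb.route(nat_ip) for _user in range(100))
print(load)  # Counter({'app1': 100})

# Identifier change: the same user's next request arrives from a different IP
# (new proxy, new edge node, DHCP renewal), so it looks like a brand-new client.
first = lb.route("198.51.100.20")
second = lb.route("198.51.100.21")
print(first, second)  # app2 app1
```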

I would like to take a minute to walk through some real-world examples I have encountered that might shed more light on some of these issues.

Example #1

We had an application that was providing an API for some client-based systems. A development team stood up the API application on two load-balanced servers, tested it, and deployed it. All worked well. After a while someone noticed that the load did not seem to be evenly distributed. In fact, on inspection, all traffic was going to a single node and the second node was not receiving any traffic. This caused a lot of head scratching, because everything appeared to be configured correctly.

I came in, did my own investigation, and found the problem. The user requests were actually traversing two load balancers. The first routed each request to the proper application; the second balanced the requests across the two application nodes. The second load balancer was configured to use sticky sessions based on IP. Unfortunately, all the requests it received came from the primary load balancer, so they all had the same IP and were therefore all assigned to a single sticky session.

In this case I worked with the development team to remove the need for sticky sessioning and disabled this feature on the load balancer. Fortunately we caught this problem before the application traffic rose to a point where more than one server was needed.

Example #2

In another case, we had an API providing data for a mobile application. The way the application was written, it required the API to maintain a user session on the server, so sticky sessions were enabled. Shortly before the application launch, the API code was moved into production and final quality assurance testing took place. The QA team began to report intermittent glitches that had not appeared in earlier testing: the state information would periodically be lost. This did not happen frequently, but they were able to document multiple instances.

The development team immediately suspected a problem with the sticky sessions. However, a quick check confirmed that the feature was enabled, and no issues were found with the application code itself. Only after further investigation was it found that the IP addresses of the requests would periodically change.

A conference with the mobile application developer was initially unable to explain this behavior. They had to do more digging before eventually turning up the cause. It turned out that the framework used by the mobile application passed all requests up to a centralized system, which then made requests out to our API servers when it needed data. Therefore we were seeing the IP addresses of these framework servers, not those of the users' mobile devices as expected. From time to time, the framework server handling a user's requests would change, which broke the sticky session. This problem caused a significant delay in the product launch while the application was reworked.

Example #3

One of our teams added a captcha challenge to an application. Users would have to pass the captcha challenge before the API would process their requests. Before the captcha was added, the application did not need sticky sessions. The team dropped in a simple captcha component. It would generate a challenge/response pair, store it in a server session, and return the challenge to the user. The user would submit a response (correct or not), and it would be checked against the one stored in the server session. Since server sessions were now being used, sticky sessions were enabled on the load balancer.
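The captcha flow just described might look roughly like this. `new_challenge` and `check_response` are hypothetical names, and plain dicts stand in for each server's session; a real captcha component would render the challenge as a distorted image rather than return the answer text.

```python
import secrets
import string


def new_challenge(session):
    """Create a challenge and store its expected answer in the server-side session."""
    answer = "".join(secrets.choice(string.ascii_uppercase) for _ in range(5))
    session["captcha_answer"] = answer
    # A real component would return an image of this text, not the text itself.
    return answer


def check_response(session, response):
    """Check the user's response against the answer stored in this server's session."""
    expected = session.pop("captcha_answer", None)  # each challenge can be answered once
    return expected is not None and response.strip().upper() == expected


app1_session, app2_session = {}, {}
shown = new_challenge(app1_session)
print(check_response(app1_session, shown))  # True: answered on the server that issued it
shown = new_challenge(app1_session)
print(check_response(app2_session, shown))  # False: request shifted to app2, no stored answer
```

The failure mode is exactly the one described next: the user sees a failed captcha and simply gets a new one.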

There actually were no major problems with this. The sessions typically lasted only a minute or two, so very few users (if any) would be affected when load was shifted from one server to another. And when a user was impacted, their captcha would fail and they would get a new one. The user would simply assume they had mistyped the response and try again (hopefully successfully), rather than conclude that the application was malfunctioning. The use of sticky sessions did cause slightly uneven load balancing, but this was minimal due to the short user interaction with the service.

In this instance the time and cost to put a different method in place may not be warranted. Any issues from it were rare and did not cause major user frustration.

Summary

The use of simple server sessions tends to be taken for granted, and developers just assume that enabling sticky sessions on their load balancers is perfectly safe. Typically it will work fine most of the time. But when it doesn’t, it can take a significant amount of time to track down the issue. An application may have infrequent reports of glitches that cannot be reproduced.

Sometimes configuring your load balancer to use a non-default sessioning method will fix these problems. In fact, in my first example above we could have reconfigured the load balancer to use a cookie-based method, and possibly in the second as well. Some load balancers support injecting their own session cookie into your HTTP traffic, which they use to track user sessions. Some may also support checking an existing application cookie (e.g. JSESSIONID, PHPSESSID, ASPSESSIONID). This last option is better if supported. However, these methods only help with some of the problems associated with sticky sessions.
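A cookie-based variant can be sketched by keying the affinity table on the application's own session cookie instead of the client IP. `CookieStickyBalancer` is a hypothetical class, `JSESSIONID` is just the cookie name assumed here, and round-robin assignment is again a simplification; only `http.cookies.SimpleCookie` comes from the Python standard library.

```python
import itertools
from http.cookies import SimpleCookie


class CookieStickyBalancer:
    """Hypothetical sketch: pin requests by the application's session cookie, not the client IP."""

    def __init__(self, servers, cookie_name="JSESSIONID"):
        self._next = itertools.cycle(servers)
        self._cookie_name = cookie_name
        self.affinity = {}  # session cookie value -> server name

    def route(self, client_ip, cookie_header):
        # client_ip is deliberately ignored: NAT, proxies and IP changes no longer matter.
        cookies = SimpleCookie()
        cookies.load(cookie_header)
        morsel = cookies.get(self._cookie_name)
        if morsel is None:
            # No session yet: pick any server; the app sets the cookie on its response.
            return next(self._next)
        if morsel.value not in self.affinity:
            self.affinity[morsel.value] = next(self._next)
        return self.affinity[morsel.value]


lb = CookieStickyBalancer(["app1", "app2"])
print(lb.route("192.0.2.1", "JSESSIONID=user-a"))     # app1
print(lb.route("192.0.2.1", "JSESSIONID=user-b"))     # app2: same IP, different user
print(lb.route("198.51.100.9", "JSESSIONID=user-a"))  # app1: IP changed, cookie did not
```

This only addresses mis-identification and identifier changes. If `app1` goes down for maintenance or is overloaded, the sessions it holds are still lost.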

If your application requires 24/7 availability and a high-quality user experience, I strongly suggest staying away from sticky sessions. There are a number of ways to enable your application to use sessions that do not require the user to always visit the same server. Illustrating those methods is beyond the scope of this post, however. If you still require sticky sessions, look into configuring them to use a non-IP-based sessioning method.

Enough of my ranting. Hopefully now you will think carefully before you enable sticky sessions on your load balancer. And if you still use it, at least you are more aware of the consequences.

That's wonderful information…thanks for that

Why doesn't session replication across clustered servers solve the problems you reported? Any idea?

Excellent. Thanks a ton!

Thank you for sharing. You used a simple explanation and good examples. It really helped me a lot.

I’m glad you found it helpful.

Nice explanation indeed. 🙂

Is a sticky session sliding or static?

Great Post !

really good explanation 🙂

good job

Good job man! Thanks for your helpful work!

Really well-written and helpful post. Thanks!

Thanks for this great article.

I was reading on sticky sessions and the IP based stuff for creating sticky sessions. I imagined some of the problems you listed here while reading stuff on sticky sessions and I was wondering if I am missing something. Thanks for validating my suspicions :).

Depending on your traffic you can set the balancer to a specific server for subsequent events. This will allow your default sessions to work.

Otherwise, your server-side sessions need to be made available (obviously) to all web servers, just like images etc. Setting up a cache per web server will not share the sessions between servers. If you're using a separate database server, you can use database sessions.

I've used a separate database in the database server for them with great results.

Thanks a lot. It really helped me understand a similar issue in my application.

Yes, a PHP session is "session state". And yes, often that session state is stored on disk. That's actually the problem. If you are trying to scale out, then you will have multiple systems. Unless you have a shared disk, then the other systems won't have access to that state.

And let's say you do have a shared disk or other shared resource like a database. If you grow to a very large scale, then that database itself needs to scale out. So people start clustering their databases.

Often that is fine and works for many sites. But for some sites, even that is not enough scale. If you can avoid session state, that's a great thing.

In my experience, however, most web sites just use session state, and it's actually fine. I've gotten a little more mellow about this as I've gotten older 🙂

I just realized this is such good information.

Good information and related to topic.

Thanks

Thank you for sharing….

The examples are very good, Thanks for sharing


Use a database to store the session information. That will solve most of the problems you've listed. And if the site scales so that a database isn't enough you probably have larger issues to solve.
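A minimal sketch of the database approach from the comment above, using Python's built-in `sqlite3` with an in-memory database as a stand-in for a shared database server. The `DatabaseSessionStore` class and the `sessions` table layout are hypothetical; the point is only that both app servers read and write the same store, so it no longer matters which one receives a request.

```python
import json
import sqlite3


class DatabaseSessionStore:
    """Hypothetical session store backed by a database every app server can reach."""

    def __init__(self, conn):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS sessions "
            "(session_key TEXT PRIMARY KEY, payload TEXT NOT NULL)"
        )

    def save(self, session_key, data):
        # Serialize the session dict as JSON and upsert it.
        self.conn.execute(
            "INSERT OR REPLACE INTO sessions (session_key, payload) VALUES (?, ?)",
            (session_key, json.dumps(data)),
        )
        self.conn.commit()

    def load(self, session_key):
        row = self.conn.execute(
            "SELECT payload FROM sessions WHERE session_key = ?", (session_key,)
        ).fetchone()
        return json.loads(row[0]) if row else None


shared_db = sqlite3.connect(":memory:")  # stand-in for a real shared database server
app1 = DatabaseSessionStore(shared_db)
app2 = DatabaseSessionStore(shared_db)

app1.save("abc123", {"user": "alice", "cart": ["book"]})  # written while app1 handled the request
print(app2.load("abc123"))  # {'user': 'alice', 'cart': ['book']}
```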

Honestly, I don't see sticky sessions as evil; just the way they are used in your examples is evil.

Your article is like saying that Java can cause an OutOfMemoryError, so Java is evil.

You should consider using a different approach, i.e. application-controlled session instead of Duration-based sessions (don't let the load balancer manage the session based on an IP address or load-balancer generated cookie).

Thanks for the post. We recently moved from Tomcat session mgmt to Spring Sessions with Redis storing the session info on another server. We have 2 nodes in our cluster. We used to use sticky sessions, but with the move to Spring we figured we didn't need them anymore, but the captcha wasn't working for our "forgot password" utility.

We hope to move our application to a cloud soon, so we would like to not use sticky sessions. Do you have any suggestions on how to get around this? Can the JCaptcha challenge/response be stored in a node agnostic way?

Thanks for the post.. very useful